If you are like me, you enjoy discovering new ways to gain insight into what is happening with your code. Especially, when it comes to performance and scalability. And making things as tight and as efficient as possible.

Writing and executing graphics programs for simulations and games can be quite taxing on CPU resources. I say that because I got real concerned with a recent program I wrote called Life which simulates digital cellular life forms that grow, prosper or perish in a colony of peers based on some simple growth rules (for more information, google: game of life wiki conway). Here are sample graphics of a few time slices of an animated "snowflake". Other shapes and life forms are possible.

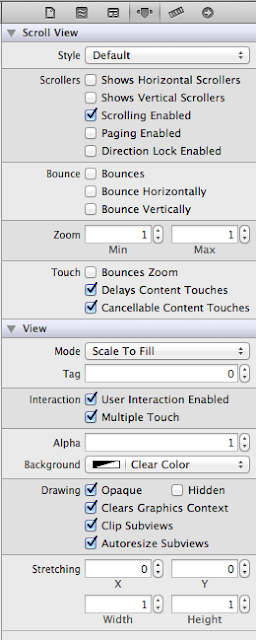

On my first iteration of the code, I wanted to understand why my

program was taxing the CPU at what appeared to be a steady 15%

utilization in the OSX Activity Monitor. I wanted more details on

what was happening.

In the code, I also made the main simulation loop as tight as possible, eliminating any extraneous calls or

assignments, and passing any large data structures to functions by

reference only.

With that accomplished, my investigations led to parts of the code that

were called most often. From there, I would be able to "see" which

routines or calls were taking the most time to execute.

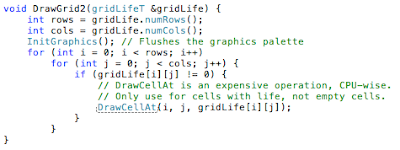

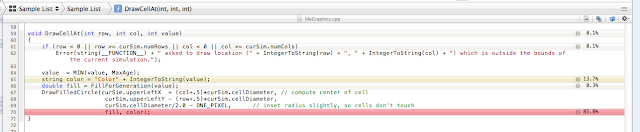

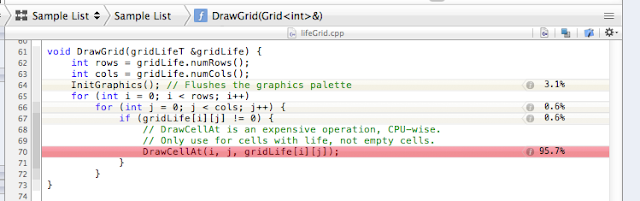

To cut to the chase, I intuited that the call that writes pixels to the graphics palette was exhibiting the most influence on the time profile. In fact, the code, prior to performance enhancement, looked like this:

Obviously, DrawCellAt() is called the most. This is a "free" library function (not a class) (I have access to the source for further inspection).

So what's happening? Prior to this, the grid is continually inspected and updated based on the Life growth rules. After that, the next generation of life is determined and stored in the gridLife variable, then DrawCellAt is called for every cell in the grid.... every cell in the grid.... ouch! Even for cells that are now empty due to a life form that recently died and even for cells that are basically serving background, DrawCellAt() is called. Hmmm... that's expensive, CPU-wise.

So again, what's happening? Well, Here's one way to answer this. I know that the graphics palette is 21 rows x 30 columns or 630 cells and the snowflake is on average 50 cells. Assuming on the next generation, it is also 50 cells, then on average, about ~10-20% of the cells will need updating, either alive or dead, to keep the animation moving.

But if the code is blindly updating all 630 cells, whether it had or has life or

not, there are slim chances to achieve any efficiency. What is needed is some

way to "compress" the data that is written to the graphics palette.

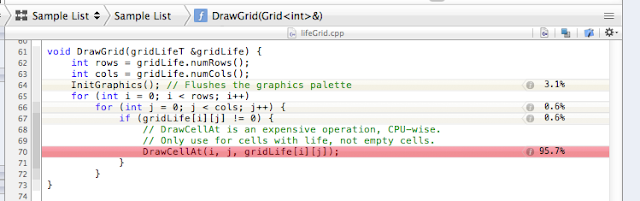

So I rewrote the routine to flush the graphics palette and tighten the loop to only call DrawCellAt() when there is "life" in the next-generation cell:

The result? A 5x to 7x improvement in CPU % utilization (down to ~3%). That's pretty good.

So what's really happening?

Enter Xcode Profiling!

Profiling is an Xcode compiler option can be invoked while developing, executing and testing code. In this case, I want to "see" the difference between versions 1 and 2 of DrawGrid in terms of CPU resource utilization within the code itself.

Here is the quick HOW-TO procedure:

If you want to follow along: you can get the source here and load into Xcode:

https://github.com/vincemansel/Life

(Or just git the repo and open the

Instruments4.trace file. You will need to change Search Paths in Preferences to point to where the source files live.)

- In Xcode, select the Profile command (Hold the mouse down momentarily on the Run command and several options will appear. Select Profile.

- This launches "Instruments" which provides profile options. Choose Time Profiler.

- This starts "Life", the program to profile. Enter parameters into Life to execute the simulation. (I simulated the snowflake file on level 2, Plateau mode).

- I am interested in writes to the graphics palette. Capture about 10 seconds of data and kill the process, or stop Recording data.

- Do this for both versions of the program (i.e. one with DrawGrid1 and one with DrawGrid2)

- Analyze the data as follows:

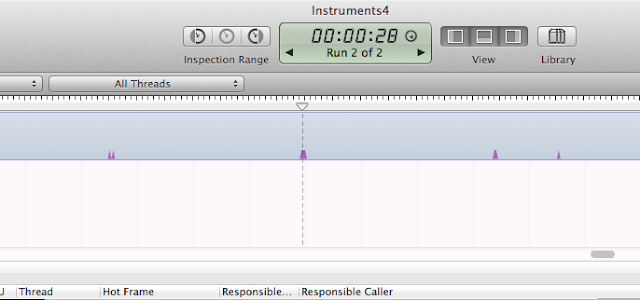

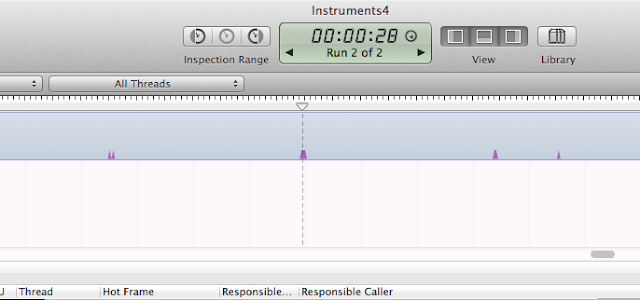

The Instrument will show a time graph of the program CPU usage by default. The time graph is 100ms/pixel.

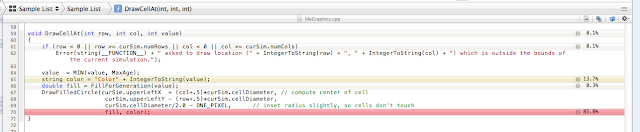

I can also open up the Extended Detail panel on the right side of the window. This shows a stack trace for DrawGrid() and beyond.

Since Time Profiler is compressing the time line, expand it so it looks like this (~500us/pixel).

In the Sample List, select a sample to hightlight. Note it is highlighted where the "scrubber" or time-marker is currently placed. Use the up or down arrow to scroll through the timeline, jumping from sample to sample. Any sample will do that indicates the code is currently running in the Life process.

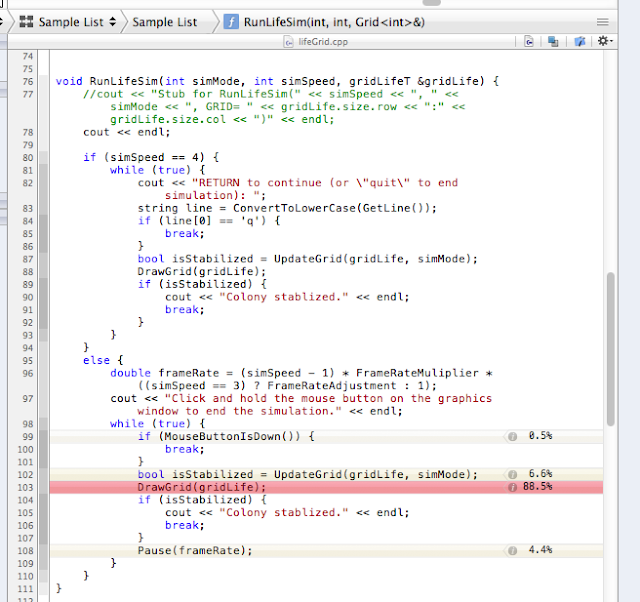

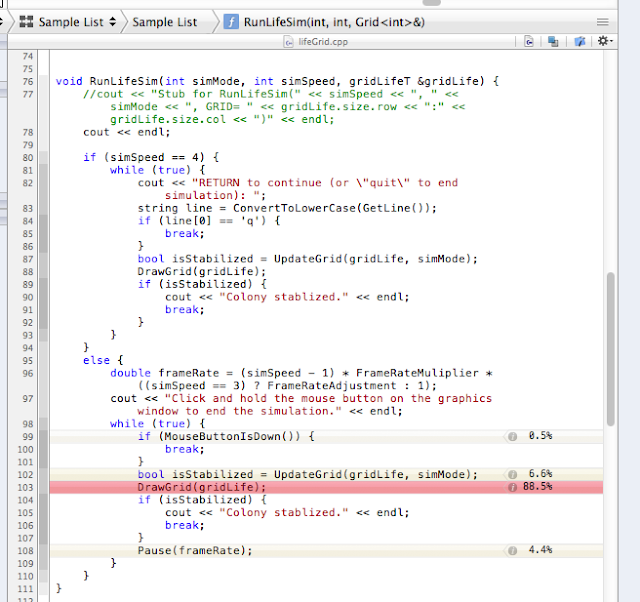

In the Extended Detail panel, I see the call list that I am

interested in. So I first double-click RunLifeSim() to determine how much

"time" the program is spending in DrawGrid().

This opens a window directly into the code:

I see that DrawGrid occupies over 99% of the resources in this function.

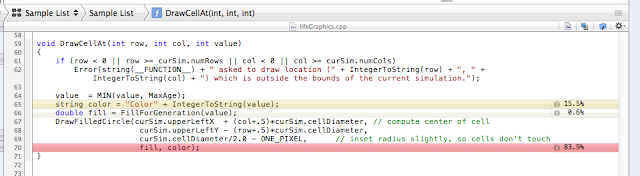

I can go further.... click back to the SampleList.... double-click on DrawGrid() in the Extended Detail panel.

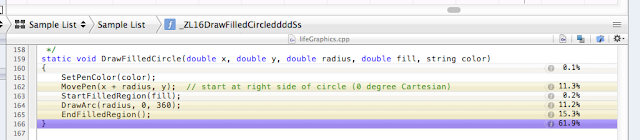

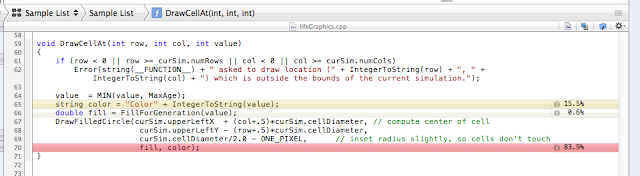

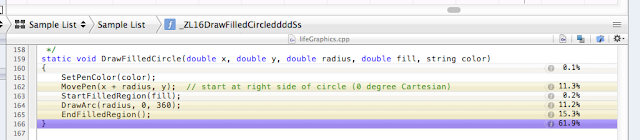

Aha, 100%. This is making sense. But I want to go further.... open DrawCellAt()... open DrawFilledCircle()...

|

| DrawCellAt() - Hmmm... |

This gives me an indication of what is taking up time to do the work. It does not tell me how or what to fix but insight is gained into the inner workings of the code...

|

| DrawFilledCircle() - Too deep, but interesting... |

Now, what about DrawGrid2. Where is the improvement? I want to "see" it. Check it out:

|

| Every update interval results in a smaller burst of CPU usage. |

|

| RunLifeSim() - I see improvement!!! |

|

| DrawGrid() - I see a little bit here too! |

|

| DrawCellAt2() - This works on a cell by cell basis. No improvement here. |

Reducing the number of calls to DrawCellAt() (or compressing the amount of graphics data written) reduces the amount of

resources that DrawGrid() is taking as a percentage of the overall program.

Also note the reduction in time that the code is taking every update interval (0.5 seconds). I reduced the time scale down to 50 us/pixel, For DrawGrid1, the "time burst" is 14.160 msec.

For DrawGrid2, it is 0.954 msec!

A 14x improvement!!!

Awesome!

Recap: 14x improvement in the code. 5x - 7x improvement at the process level. Done.

That was fun!

Code for Life and the Instruments trace file is available here:

https://github.com/vincemansel/Life

Life was developed and executed on a MacPro, 2 x 2.8 GHz Quad-Core Intel Xeon with 8GB 800MHz DDR2 F8-DIMM and OSX Version 10.7.1 with Xcode Version 4.1 Build 4B110.

Acknowledgements to the folks over at Stanford Computer Science (SEE) for the LIfeGraphics resources and the underlying utility programs. You can find them on ItunesU.

Send me a comment. Or follow my links to get in touch.

Thanks, Vince